Ambient Stratagem: Dispatches from the Algorithmic Front - 15th June 2025

Week: 8–14 June 2025

ENTERING THE LOGIC LAYER

A lie told once is still a lie. A lie repeated by an autonomous swarm that wipes a satellite relay station is a doctrinal problem.

This was the week escalation went airborne and algorithmic. A high-intensity state-on-state conflict saw AI-assisted targeting, hybrid information warfare and thousands of drones cross from grey to flame. The logic layer is no longer theoretical. It’s executing.

THIS WEEK’S ALGORITHMIC FLASHPOINTS

Israel Conducts Coordinated AI-UAV Strike on Iranian Targets

Israel launched Operation Rising Lion, a pre-emptive strike involving AI-assisted drone teams, 200+ aircraft, and Mossad special operations, hitting over 100 strategic Iranian targets including nuclear and IRGC facilities. Iran retaliated with over 150 ballistic missiles and more than 100 drones.

Why it matters: This was the first confirmed example of human–AI teaming used in high-intensity conflict between states. Israel’s use of AI for ISR and targeting within a dense denial environment sets a precedent.

Doctrinal shift: Autonomous ISR and strike packages are no longer “grey zone” assets; they are part of full-spectrum war. The old assumption that AI assets were asymmetric tools has crumbled.

Duality AI and CoVar Join DARPA’s ASIMOV Ethics Program

DARPA enlisted Duality AI and CoVar to develop benchmarks for lawful and ethical battlefield autonomy as part of ASIMOV, a program explicitly focused on runtime decision-making constraints.

Why it matters: The US is shifting from theoretical debate to runtime enforcement. These benchmarks could define what autonomy is permitted under fire, an operational constraint with strategic significance.

Doctrinal shift: The “model-level control” era is ending. Runtime lawfulness, executed and auditable at the edge, is becoming the new operational baseline.

MIT Demonstrates Autonomous UAV Targeting Enhancements

MIT engineers revealed a new AI-based flight control system enabling drones to maintain targeting stability and adaptability in unpredictable combat conditions.

Why it matters: This brings AI-targeting closer to battlefield readiness. Precision autonomy in ISR and kinetic delivery removes dependence on satellite or operator link, critical in degraded environments.

Doctrinal shift: This validates the rise of platform-local AI: logic onboard, not hosted. ISR assumptions now hinge on survivability, not bandwidth.

Russia Refines “Information Confrontation Doctrine” with Cyber–Info–Kinetic Fusion

Analysts confirmed that Russian doctrine is operationalising hybrid warfare through integrated cyberattacks, false-flag sabotage, and cognitive domain operations. This week’s observed activity suggests Moscow is testing soft power escalation backed by hard capabilities.

Why it matters: Cyberwar is not just prelude. It is coordinated, layered, and designed for ambiguity. AI-generated information operations blur attribution while cyber actions degrade response.

Doctrinal shift: Russia no longer separates domains. Cyber, information, and kinetic are fused into a single escalation track—blurring Geneva thresholds and Western deterrence logic.

CNAS Report Reveals Massive AI-Chip Smuggling to China

On 11 June, the Center for a New American Security (CNAS) revealed that 10,000–300,000 high-end AI chips were illicitly transferred into China in 2024, circumventing export restrictions.

Why it matters: Technological containment strategies are not holding. Smuggled hardware undermines US control over military-use semiconductors.

Doctrinal shift: “Export control” as a lever of strategic power is weakening. Supply chain logic is no longer enforceable by paper law. Hardware leakage is now a live strategic vulnerability.

Huawei Capped at 200,000 AI Chips in 2025 by US Sanctions

The US Commerce Department confirmed new sanctions limiting Huawei’s AI chip production, capping capacity at 200,000 units, even as Huawei ramps domestic fab investments.

Why it matters: This is containment by bottleneck. It underscores a new strategy: limit adversary capability not by territory, but by denying scalable logic infrastructure.

Doctrinal shift: Strategic influence is now exerted through AI-capacity throttling: counting chips, not tanks.

NATO Integrates AI in Locked Shields Cyber Exercise

The NATO Cooperative Cyber Defence Centre of Excellence (CCDCOE) confirmed AI was deployed during Locked Shields 2025, using predictive analytics and simulated attacks to test alliance responses.

Why it matters: NATO is practising AI-enhanced cyber defence not as adjunct, but as core capability. AI is now integral to rapid threat characterisation and response tempo.

Doctrinal shift: Defence posture is adapting: anticipation, not reaction, is the doctrinal pivot. Cyber strategy is becoming runtime-led, not policy-lagged.

UK Defence Review Confirms Pivot to AI and Drones

The UK’s Strategic Defence Review formally announced a doctrinal reorientation, naming AI and autonomous drones as central to national security in a “new era of threat.”

Why it matters: Britain is shifting procurement, training, and posture around unmanned, embedded systems, suggesting peer war readiness, not just expeditionary logic.

Doctrinal shift: The Cold War model of human-centric force structure is being retired. Human-in-the-loop is being repurposed to human-on-the-loop.

SIGNALS IN THE NOISE

This was not a week of separate headlines; it was a week of convergence.

We are witnessing the transition from AI-assisted warfare to AI-structured conflict. AI is no longer simply a tool within systems; it is shaping the system logic itself, on the battlefield, in procurement decisions and in adversary doctrine.

Israel’s use of AI and drones in concert with special forces represents more than operational innovation. It signals the arrival of ambient warfare: layered, localised, and logical. Russia’s doctrinal fusion of cyber, information, and kinetic action continues to outpace Western bureaucratic silos. Meanwhile, NATO’s exercises and the UK’s procurement pivots show a growing awareness that runtime decision layers, not static rulesets, will define survivability.

What binds these threads is logic control: who defines it, who builds it, who enforces it. Whether in DARPA ethics protocols or in the smuggling of AI chips, the war for runtime advantage is fully underway.

PREDICTION PROTOCOL

Prediction 1: Expect PLA units to begin testing AI-led denial-of-signal operations against UAV swarms in Q3. Given US/NATO advances in autonomous ISR, China will accelerate counter-AI tactics based on 2023 cognitive EW experiments.

Prediction 2: Israel will formalise its runtime logic layer in procurement doctrine by Q4—possibly creating an integrated “Logic Operations Command” for AI/UAV orchestration. This stems from Rising Lion’s success and follows trends in US Indo-PACOM force restructuring.

BLACK BOX – THE HIDDEN SIGNAL

A barely-noticed footnote in DARPA’s ASIMOV partnership announcement mentioned an “Edge Audit Layer” capable of continuous lawful traceability of autonomous battlefield decisions.

This implies more than ethical AI. It suggests that the US intends to prove legality in near real-time. In future contested environments, legitimacy will be adjudicated not by treaty, but by audit trail.

This is the beginning of wartime compliance infrastructure at the speed of runtime.
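
For readers who want to picture what “continuous lawful traceability” could look like in practice, here is a minimal sketch of one well-known pattern: a hash-chained decision log, in which each record commits to the one before it so that later tampering becomes detectable. This is an illustration only, not a description of ASIMOV or of any DARPA design. The function names (`append_entry`, `verify_chain`), the record fields, and the `GENESIS` placeholder are all hypothetical.

```python
import hashlib
import json

# Hypothetical placeholder "previous hash" for the first record in a log.
GENESIS = "0" * 64


def _digest(body: dict) -> str:
    # Serialise with sorted keys so the same record always hashes identically.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, decision: str, rule: str, timestamp: float) -> dict:
    """Append a decision record that is linked to the hash of the previous one."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {
        "decision": decision,    # what the system did (illustrative field)
        "rule": rule,            # which constraint it claims to have applied
        "timestamp": timestamp,
        "prev_hash": prev_hash,  # link to the preceding record
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if every record is unmodified and correctly linked."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Editing any earlier record changes its hash and breaks every link after it, which is what would let an auditor check the trail after the fact. A fielded system would also need signing, secure storage, and trusted time sources, none of which this sketch attempts.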

WHAT THIS MEANS FOR SURVIVABILITY

The survivability principle is shifting from hardened infrastructure to resilient logic. Drones can strike targets deep behind lines, adversaries can cross borders with packets, not tanks, and AI systems can now operate detached from command.

We are entering an era where the defining feature of military advantage will be: Can your system operate lawfully, independently, and adaptively when blind, jammed, or lied to?

The new capability gap is runtime resilience, not in the cloud, but on the chip. At the edge. In the loop.

In the end, it won’t be firepower that decides this. It’ll be logic resilience.

A FUNNY THING HAPPENED IN THE GREY ZONE…

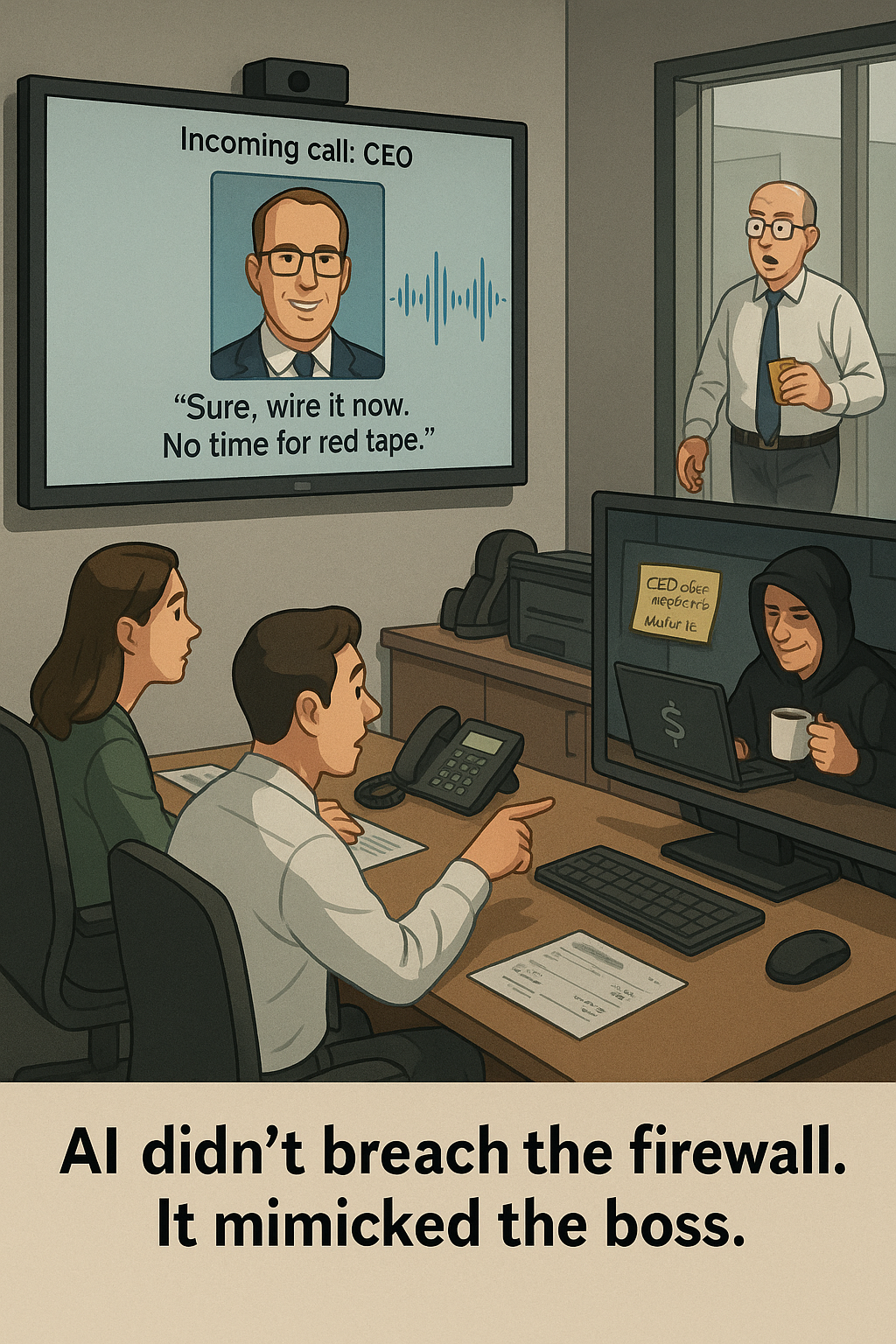

This week’s standout unintentional comedy came via a true story highlighted by cybersecurity researchers and verified in late 2024–early 2025:

A UK energy company fell victim to an AI-powered CEO deepfake during a phishing call. Attackers used advanced voice-cloning technology to impersonate the CEO in a live audio meeting, and the organisation transferred £243,000 before realising something was amiss.

Why it’s absurd yet alarming:

The call sounded plausible enough that no one paused. The AI didn’t glitch mid-sentence, nor did it mispronounce a single word. A machine voice passed for a human exec—with money moving on cue.

Strategic tell:

What was once a niche prank has now morphed into boardroom-grade deception. In a world where digital voices become weapons, the toughest security problem isn’t firewalls, but knowing who you’re really talking to.

QUOTE OF THE WEEK

“The first rule of modern conflict is simple: you will lose access to the thing you thought you could rely on.”

— Gen. David Petraeus (remarks at Hudson Institute, 2024)

LATEST WHITE PAPER

48 Hours Without Human Control: A NATO GIA Misalignment Simulation

📤 Get this to someone who needs to see the terrain.

DISPATCH ENDS